Research

My research interests are broadly in the domain of Virtual Reality and Embodiment. Currently I am focusing on multiple embodiments: interfaces in which users control multiple virtual bodies simultaneously. We are studying people's qualitative experiences in such interfaces and the strategies they develop for controlling them. My current work seeks to understand how users interact with and coordinate among many bodies, and to connect these concepts to Embodied Cognition and Phenomenology.

I am also interested in how VR can augment design processes, in particular the role that VR should play in early-stage design prototyping. Additionally, I am broadly interested in Graphics and Computer Vision research related to VR.

Previously I did research at the intersection of augmented reality and computer vision, studying interfaces for collecting in-situ training data and augmenting human awareness using computer vision.

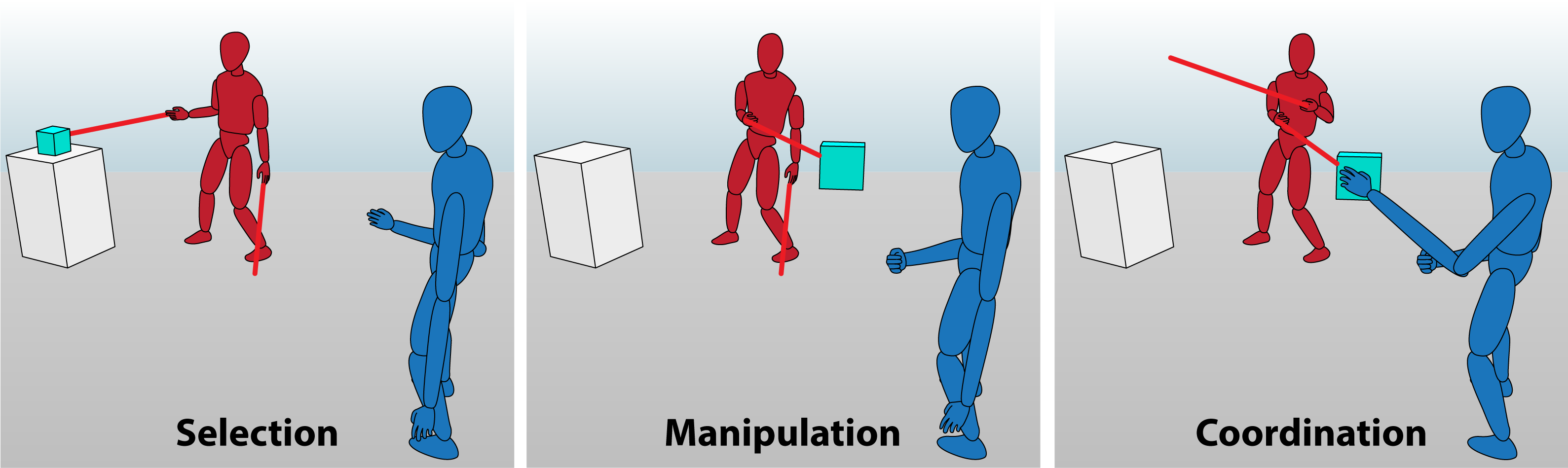

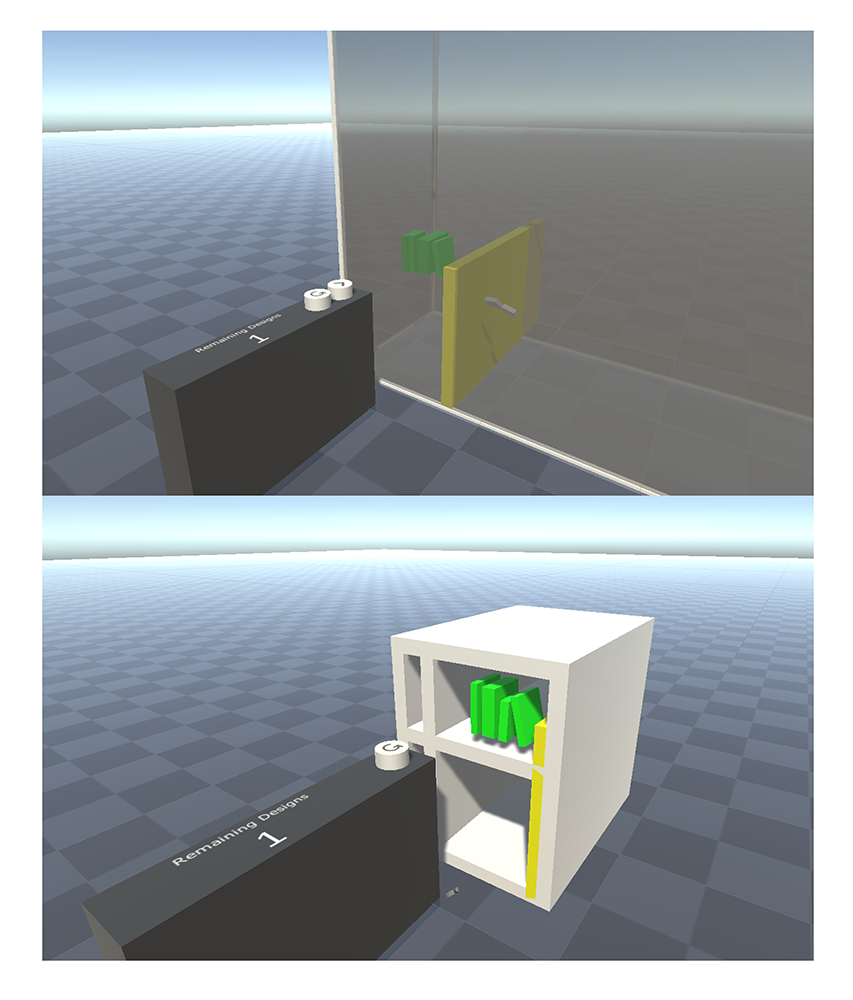

Dual Body Bimanual Coordination in Immersive Environments

DIS 2023

Dual Body Bimanual Coordination is an empirical study in which users simultaneously control two bodies in virtual reality and interact with the world through both. Users select and manipulate objects to perform a coordinated handoff between the two bodies, both of which are under the control of a single user. We investigate participants' performance on this task, classify the strategies they chose for coordinating their hands, report on their sense of embodiment during the scenario, and share qualitative observations about their body schema.

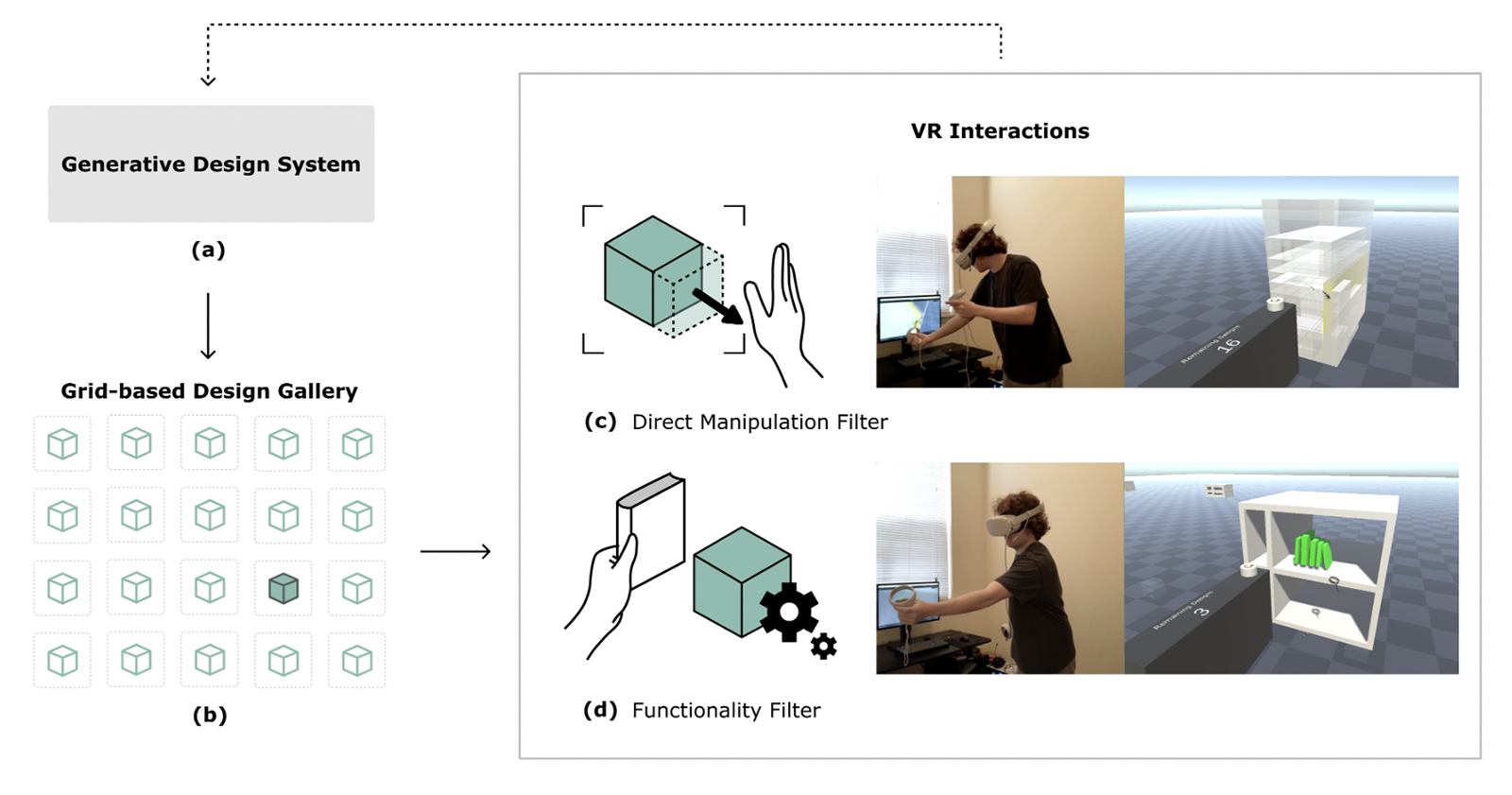

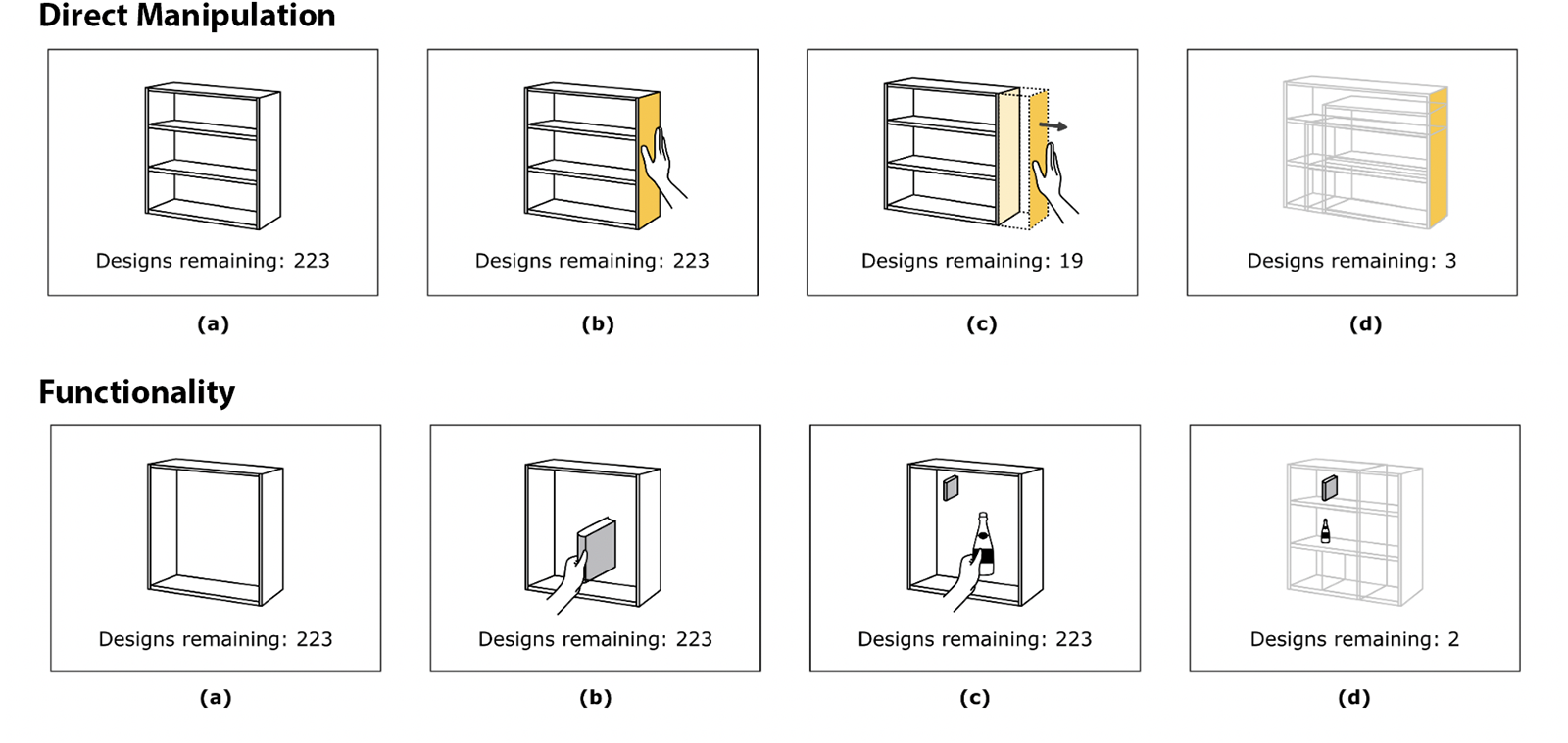

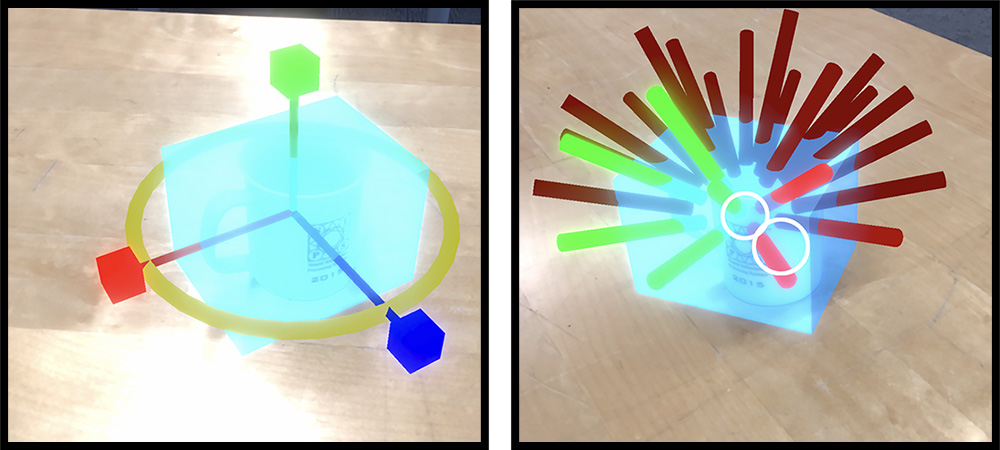

GeneratiVR: Spatial Interactions in Virtual Reality to Explore Generative Design Spaces

CHI 2022 Extended Abstracts

Computational design tools can automatically generate large quantities of viable designs for a given design problem. This raises the challenge of how to enable designers to efficiently and effectively evaluate and select preferred designs from a large set of alternatives. In GeneratiVR, we present two novel interaction techniques that address this challenge by leveraging Virtual Reality for rich, spatial user input. With these techniques, users can directly manipulate designs or demonstrate desired design functionality, allowing them to rapidly filter through an expansive design space to specify or find their preferred designs.

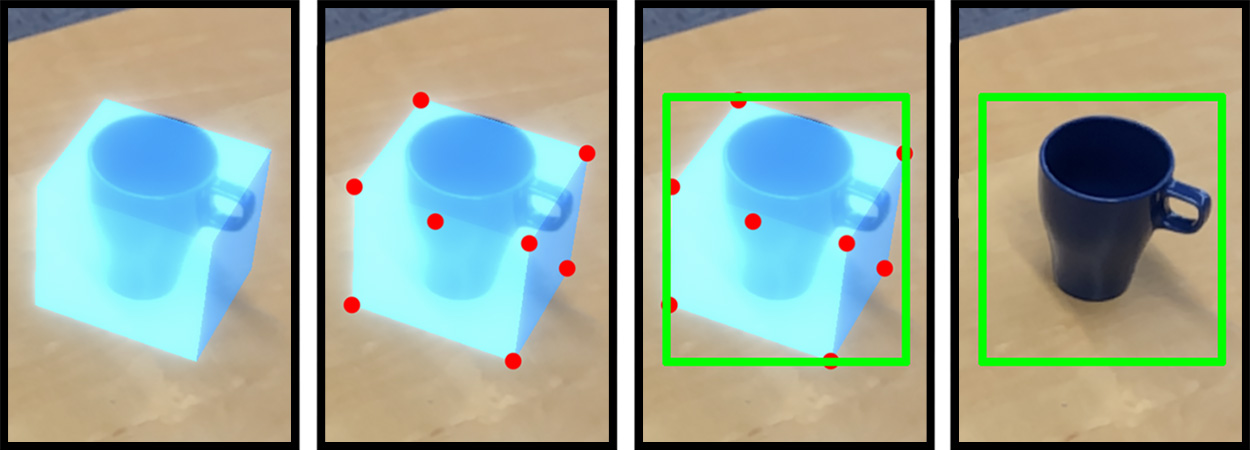

LabelAR: A Spatial Guidance Interface for Fast Computer Vision Image Collection

UIST 2019

Computer vision is applied in an ever-expanding range of applications, many of which require custom training data to perform well. We present a novel interface for rapid collection and labeling of training images to improve computer-vision-based object detectors. LabelAR leverages the spatial tracking capabilities of an AR-enabled camera, allowing users to place persistent bounding volumes that stay centered on real-world objects. The interface then guides the user to move the camera to cover a wide variety of viewpoints. We eliminate the need for post-hoc manual labeling of images by automatically projecting 2D bounding boxes around objects in the images as they are captured from AR-marked viewpoints. In a user study with 12 participants, LabelAR significantly outperforms existing approaches in terms of the trade-off between model performance and collection time.
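
As a rough illustration of the projection step, here is a minimal sketch (not LabelAR's actual implementation) that turns a 3D bounding volume into a 2D image label. It assumes a standard pinhole camera model with intrinsics `fx, fy, cx, cy`, a world-to-camera pose `(R, t)` like the one an AR tracker supplies, and an axis-aligned box; all function names are hypothetical.

```python
from itertools import product


def box_corners(center, size):
    """Return the 8 corners of an axis-aligned 3D box in world coordinates."""
    cx, cy, cz = center
    hx, hy, hz = (s / 2 for s in size)
    return [(cx + sx * hx, cy + sy * hy, cz + sz * hz)
            for sx, sy, sz in product((-1, 1), repeat=3)]


def project_point(p, R, t, fx, fy, cx, cy):
    """Pinhole projection of a world point; R (3x3) and t (3,) map world -> camera."""
    xc = sum(R[0][j] * p[j] for j in range(3)) + t[0]
    yc = sum(R[1][j] * p[j] for j in range(3)) + t[1]
    zc = sum(R[2][j] * p[j] for j in range(3)) + t[2]
    if zc <= 0:
        return None  # point is behind the camera
    return (fx * xc / zc + cx, fy * yc / zc + cy)


def project_box_2d(center, size, R, t, fx, fy, cx, cy, width, height):
    """Hypothetical helper: 2D label (x0, y0, x1, y1) for a 3D box, or None.

    The label is the min/max of the 8 projected corners, clipped to the image.
    Simplifying assumption: boxes with any corner behind the camera are skipped.
    """
    pts = [project_point(c, R, t, fx, fy, cx, cy) for c in box_corners(center, size)]
    if any(p is None for p in pts):
        return None
    xs = [p[0] for p in pts]
    ys = [p[1] for p in pts]
    x0, y0 = max(0.0, min(xs)), max(0.0, min(ys))
    x1, y1 = min(float(width), max(xs)), min(float(height), max(ys))
    if x0 >= x1 or y0 >= y1:
        return None  # box falls entirely outside the image
    return (x0, y0, x1, y1)
```

For example, with an identity pose, a 2 m cube centered 5 m in front of a 320x240 camera (`fx = fy = 100`, principal point at the image center) projects to the box `(135.0, 95.0, 185.0, 145.0)`. Taking the extent of the projected corners is the simplest choice; a real system might also handle boxes that cross the near plane or are partially occluded.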

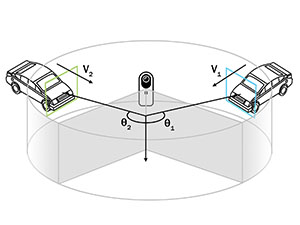

HindSight: Enhancing Spatial Awareness by Sonifying Detected Objects in Real-Time 360-Degree Video

CHI 2018

HindSight increases the environmental awareness of cyclists by warning them of vehicles approaching from outside their visual field. A panoramic camera mounted on a bicycle helmet streams real-time, 360-degree video to a laptop running YOLOv2, a neural object detector designed for real-time use. Detected vehicles are passed through a filter bank that selects the most relevant ones, which are then sonified through bone-conduction headphones, giving cyclists added margin to react.

Publications

Industry Experience

I am currently looking for research scientist internship positions. Prior to academia, I enjoyed a career as a gameplay programmer in the video game industry. Designing interactive experiences is still an interest of mine.

Research Scientist, EPIC Group - Microsoft Research

May 2021 - August 2021

VR Software Engineer, Jacobs Institute for Design Innovation - UC Berkeley

January 2019 - May 2019

Gameplay Programmer - Sony Online Entertainment

June 2008 - October 2010

Gameplay Programmer - Zombie Studios

May 2005 - June 2008

Classes

My undergraduate coursework included the following upper division classes: Signals and Systems, Convex Optimization, Operating Systems, Internet Architecture, CS Theory and Algorithms, Computational Photography, Machine Learning and Computer Vision.

I've also taken graduate coursework in HCI, AR/VR, Embedded Systems, and Data Visualization.

Hobbies

When I'm not doing research or programming, I like to snowboard, go bouldering, ride my motorcycle, or play D&D. I am also an amateur speedcuber.